One of our core products is 360° spin generation for cars, delivering a studio-like turntable finish that gives dealerships polished, professional visuals without the need for a controlled shoot setup. It’s designed to work with a wide range of inputs, from videos and images captured against any background (studio, outdoor, or edited) to very limited photo sets.

However, in practice, dealerships often provide fewer than the standard eight images needed for a smooth spin. This creates noticeable gaps that disrupt the viewing experience, a common challenge when not all angles are captured during a shoot or when only older inventory images are available and reshooting isn’t an option.

To address this, we introduced a feature that can reconstruct missing views at the eight primary angles: 0°, 45°, 90°, 135°, 180°, 225°, 270°, and 315°. By automatically detecting and generating the absent views, the system ensures a complete, fluid 360° spin. This helps dealerships present their cars more consistently, enhance the online browsing experience, and engage potential buyers with greater confidence.

Our Solution

This challenge highlighted the need for a method that could intelligently detect missing views and generate them based on the visual information available in the existing images so that the generated frames closely match how the actual views would have appeared.

When evaluating potential solutions, we found that existing diffusion models tended to hallucinate details and introduce creative variations that don’t exist in the real car. While this imaginative flexibility is ideal for artistic or open-ended generation tasks, it becomes a limitation in our case, where accuracy, consistency, and brand fidelity are non-negotiable. Our goal isn’t to invent new appearances, but to generate how the missing view of the same car would have actually looked from that specific angle. To achieve this, we developed a custom diffusion pipeline designed to work closely with the provided reference images and be tightly conditioned on the target 3D pose (or viewing angle).

This ensures that every generated view stays faithful to the car’s true geometry, texture, and lighting, producing results that align with real-world visuals. In addition, our model integrates the stabilization techniques already used in our core 360° spin product, allowing the generated views to blend seamlessly with real ones. The result is a smooth, studio-like spin that feels natural and professionally captured.

Spin without generated views

Spin with generated views (2 new views at 45° and 315°)

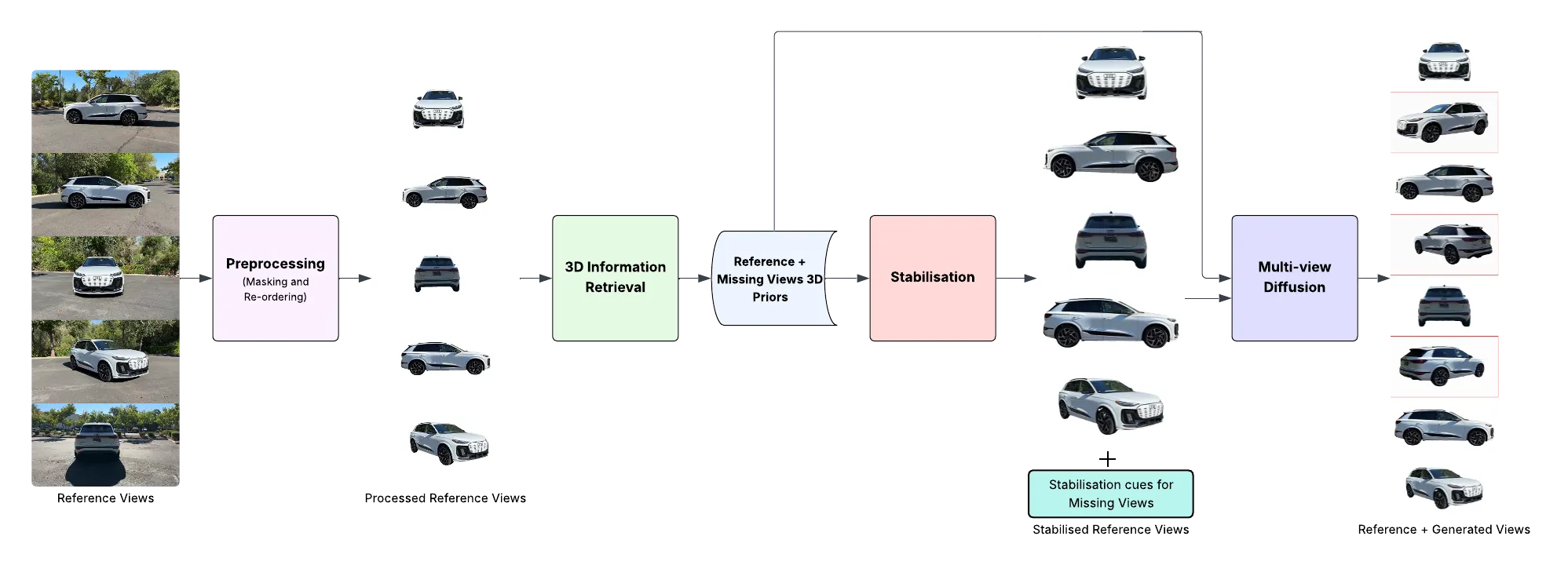

Flowchart depicting the process behind missing view generation for a 360° spin

Step-by-Step Process Behind Missing View Generation

1. Preprocessing

Inputs often arrive unordered, with cars photographed in various environments. Our in-house segmentation models and angle-determination algorithms mask the cars and reorder the reference images into a consistent 360° flow.

2. Extraction of 3D Priors

This step creates a “digital skeleton” of the car: a guiding structure that helps the system understand its shape and orientation. These priors ensure the generated views integrate seamlessly with the existing ones.

3. Determining Missing Views

Using preprocessing results and extracted 3D priors, the system identifies which viewing angles are absent and extrapolates 3D priors for those missing views to guide the generation process.
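The angle bookkeeping in this step can be illustrated with a minimal sketch. It assumes each reference image already has an estimated viewing angle in degrees from preprocessing; the function names `snap_to_primary` and `find_missing_angles` are hypothetical and not part of the actual pipeline, and the extrapolation of 3D priors is not shown.

```python
# Illustrative sketch only: the real pipeline's angle estimation and
# 3D-prior extrapolation are not shown. All names here are hypothetical.

PRIMARY_ANGLES = tuple(range(0, 360, 45))  # 0, 45, 90, ..., 315


def snap_to_primary(angle_deg: float) -> int:
    """Snap an estimated viewing angle to the nearest primary angle.

    The modulo handles wrap-around, so 350 degrees snaps to 0, not 360.
    """
    bin_index = int(round((angle_deg % 360) / 45)) % 8
    return bin_index * 45


def find_missing_angles(estimated_angles):
    """Return the primary angles not covered by any reference image."""
    present = {snap_to_primary(a) for a in estimated_angles}
    return [a for a in PRIMARY_ANGLES if a not in present]
```

For example, with six reference images estimated at roughly 2°, 91°, 133°, 178°, 226°, and 271°, `find_missing_angles` returns `[45, 315]`, so those two views would be handed to the generation stage.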

4. Stabilization

Adapted from our core spin product, this process stabilizes the existing reference frames and determines the essential stabilization cues for the new, generated views.

5. Multi-View Diffusion

The diffusion model, conditioned on reference frames, 3D priors, and stabilization cues, generates the missing views in sequence.

6. Image Postprocessing and Spin Generation

Once all eight views are complete, image-level postprocessing gives the frames a uniform, studio-grade finish across the entire spin.

Training and Model Development for Missing View AI

1. Data Preparation

We curated training sets of eight vehicle images each (across categories such as sedans, SUVs, hatchbacks, jeeps, pickup trucks, trucks, and buses) captured at the primary angles. Backgrounds were masked out so the model could focus solely on the car’s 3D structure. Stabilization cues were also derived and applied.

2. Training

During training, instead of using all eight images, we randomly sampled subsets of size n-1, n-2, or n-3 (with n = 8, so seven, six, or five images) as reference images. The remaining images served as ground truth. The model was tasked with generating the missing views, and the loss was computed against these ground-truth images.

This sampling strategy helped the model learn to accurately generate outputs across all possible angles even when only a few reference views are available.
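The sampling strategy described above can be sketched as follows. This is a simplified illustration, not our training code: it works on angle labels rather than images, the function name `sample_training_split` is hypothetical, and choosing the number of hidden views uniformly at random is an assumption.

```python
import random

PRIMARY_ANGLES = (0, 45, 90, 135, 180, 225, 270, 315)


def sample_training_split(rng: random.Random, max_missing: int = 3):
    """Split the eight primary angles into reference and target views.

    Hides 1 to `max_missing` views (assumed uniform), so the reference
    subset has size n-1, n-2, or n-3 for n = 8. The hidden views act as
    ground truth for the loss.
    """
    num_missing = rng.randint(1, max_missing)  # inclusive on both ends
    targets = sorted(rng.sample(PRIMARY_ANGLES, num_missing))
    references = [a for a in PRIMARY_ANGLES if a not in targets]
    return references, targets


# Usage: draw a fresh split for every training example.
rng = random.Random(0)
references, targets = sample_training_split(rng)
```

Because the hidden angles change on every draw, each primary angle appears as a generation target over the course of training.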

We monitored progress using quantitative metrics such as LPIPS, PSNR, and SSIM to evaluate both fidelity and perceptual quality during validation.

Validation LPIPS, PSNR, and SSIM during training

The final average LPIPS, PSNR, and SSIM scores achieved by our model on a test set comprising various vehicle types:

| LPIPS | PSNR (dB) | SSIM |
| --- | --- | --- |
| 0.13 | 17.5 | 0.81 |
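For readers less familiar with these metrics, here is a minimal sketch of the standard PSNR definition, 10 · log10(MAX² / MSE). It operates on flat sequences of pixel values and assumes an 8-bit range (`max_value=255`); it is not our evaluation code, and details such as masking or color handling may differ.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(generated) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel values.
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Under this definition, a PSNR of 17.5 dB corresponds to a root-mean-square error of roughly 13% of the peak pixel value. Higher PSNR and SSIM indicate closer agreement with the ground truth, while lower LPIPS indicates closer perceptual similarity.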

3. Validation Results

Inferred Model Output (Left) vs. Ground Truth (Right)

Model Performance and Results

Our model can generate a complete set of eight frames when provided with five to seven reference frames.

7 → 8 Frames:

Sample 1

Input Views (n=7) – Top row | Output Views (n=8) – Bottom row, stabilized and new frame generated at 315°

Final Turntable Output:

Sample 2

Input Views (n=7) – Top row | Output Views (n=8) – Bottom row, stabilized and new frame generated at 225°

Final Turntable Output:

6 → 8 Frames:

Sample 1

Input Views (n=6) – Top row | Output Views (n=8) – Bottom row, stabilized and new frames generated at 45° and 135°

Final Turntable Output:

Sample 2

Input Views (n=6) – Top row | Output Views (n=8) – Bottom row, stabilized and new frames generated at 135° and 315°

Final Turntable Output:

5 → 8 Frames:

Sample 1

Input Views (n=5) – Top row | Output Views (n=8) – Bottom row, stabilized and new frames generated at 45°, 225°, and 315°

Final Turntable Output:

Sample 2

Input Views (n=5) – Top row | Output Views (n=8) – Bottom row, stabilized and new frames generated at 45°, 225°, and 315°

Final Turntable Output:

Future Improvements for Higher Quality Spins

1. Improving Detail and Small Features in Generated Views

The current pipeline occasionally struggles to capture fine details such as small text, tire patterns, or small logos, especially when they appear at low resolution in the reference images. This limitation stems from compressing 3D priors into a lower-dimensional latent space, which can lead to the loss of subtle details.

To improve this, we’re exploring:

- Newer diffusion backbones with stronger detail preservation.

- Variants of VAEs optimized for maintaining low-level visual fidelity.

Logo in the original reference view vs. the generated view

Tire in the original reference view vs. the generated view

2. High-Resolution 360° Spin Generation

At present, our model generates images up to 1024 × 1024 resolution on a 24 GB VRAM GPU. Some clients, however, require 4K outputs. We aim to enable high-resolution generation within the same hardware limits and inference times, a key focus for upcoming releases.
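To give a sense of the gap, the short calculation below compares pixel counts. It assumes "4K" means 3840 × 2160 (UHD), which is our reading for illustration rather than a fixed client specification, and the helper name `pixel_ratio` is hypothetical.

```python
def pixel_ratio(width: int, height: int, base_width: int, base_height: int) -> float:
    """How many times more pixels the target resolution has than the base."""
    return (width * height) / (base_width * base_height)


# Assumption: 4K taken as 3840 x 2160 (UHD); current output is 1024 x 1024.
ratio_4k = pixel_ratio(3840, 2160, 1024, 1024)
print(f"4K has about {ratio_4k:.2f}x the pixels of 1024 x 1024")
```

Under that assumption, a 4K frame has about 7.9 times as many pixels as a 1024 × 1024 frame. Memory and compute for image generation typically grow at least in proportion to pixel count, which is why staying within the same 24 GB budget and inference time is a non-trivial target.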

Conclusion: Spyne’s AI-Powered Complete 360° Car Spins

By combining intelligent view detection, 3D priors, stabilization, and a custom-conditioned diffusion pipeline, Spyne’s missing-view generation delivers complete, professional-grade 360° car spins even from incomplete photo sets. This helps dealerships maintain consistent visual standards, improve digital showroom experiences, and boost buyer confidence without any need for reshoots.