Automotive photography is essential for dealerships in the 21st century. Around 69% of buyers consider good car visuals critical when searching for vehicles online, and another 26% consider them moderately important.

Yet vehicle photography with traditional (manual) methods is slow, tedious, and expensive. That’s where AI (artificial intelligence) can do wonders for you, automating part of the task and guiding you through the rest. The first step is car detection. Once the AI has detected the car, image editing becomes a breeze!

Let’s understand what car object detection is in the context of automated image editing and what happens after.

What is Car Detection?

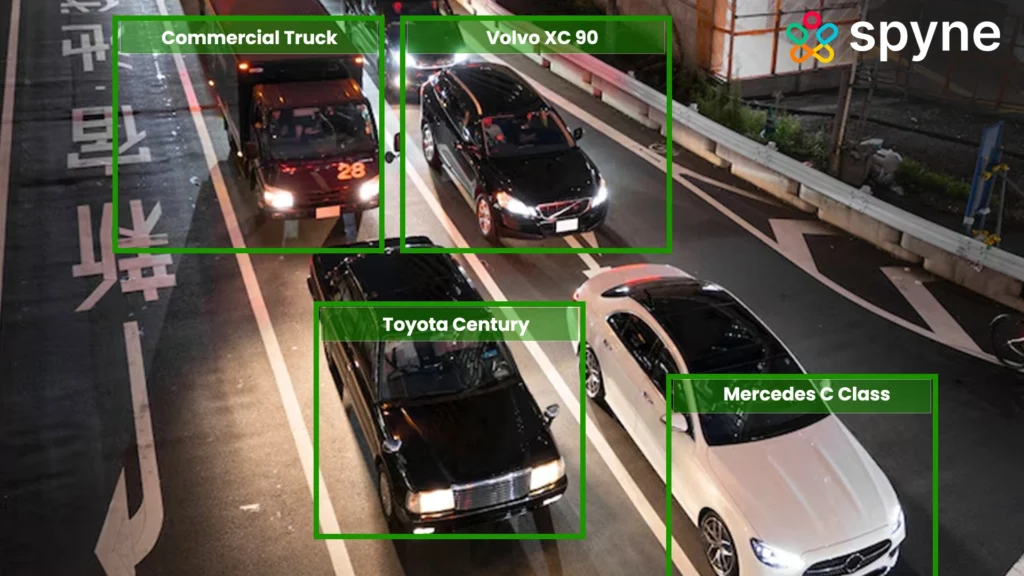

Car detection, also known as vehicle detection, is the task of locating vehicles in photos or videos. It relies on computer vision and machine learning models that learn from visual features to identify vehicles in an image.

For example, a model trained on a labeled car detection dataset learns to differentiate between vehicle and non-vehicle objects. Once trained, such models can perform tasks such as vehicle identification, car part segmentation, and damage assessment with high precision.

Car detection systems are useful not only in automotive photo shoots and car inspections but also in downstream editing tasks such as background replacement, image enhancement, and building uniform catalogs. Newer vehicle detection systems also integrate AI car recognition, which identifies a car from a picture, to make the whole workflow smoother for users.

Vehicle Detection Sensors and Systems

A vehicle detection system combines sensors with artificial intelligence to produce precise results. Common sensors include LiDAR, ultrasonic, and infrared, all of which capture detailed information about a car’s structure and its surroundings. AI algorithms interpret these data points to detect a vehicle, assess its condition, and identify details such as its type or any damage.

Key components of such systems include:

- Ultrasonic Sensors: Help detect nearby objects, especially for parking assistance.

- LiDAR Sensors: Provide high-resolution 3D mapping to identify vehicle contours and dimensions.

- Infrared Sensors: Capture a vehicle’s heat signature, which works well in low light or at night.

Combining these sensors with AI-based car recognition makes them considerably more effective.
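Of these sensors, ultrasonic ranging is simple enough to show directly. The sensor emits a pulse and times its echo; because the sound travels to the obstacle and back, the distance is half the round trip (distance = speed of sound × echo time ÷ 2). Below is a minimal Python sketch, assuming sound travels at about 343 m/s (dry air near 20 °C). The function names and the 0.5 m threshold are hypothetical, not any sensor vendor's API.

```python
# Approximate speed of sound in dry air at about 20 °C (an assumption; it varies with temperature).
SPEED_OF_SOUND_M_S = 343.0


def echo_to_distance_m(echo_time_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Convert an ultrasonic round-trip echo time (seconds) into a one-way distance (meters)."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The pulse travels to the obstacle and back, so halve the round-trip distance.
    return speed_m_s * echo_time_s / 2.0


def is_too_close(echo_time_s: float, threshold_m: float = 0.5) -> bool:
    """Hypothetical parking-assist check: True if the obstacle is nearer than threshold_m."""
    return echo_to_distance_m(echo_time_s) < threshold_m


if __name__ == "__main__":
    # An echo of about 5.83 ms corresponds to roughly one meter.
    print(f"{echo_to_distance_m(0.00583):.2f} m")
```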

What is Car Damage Recognition?

A car damage recognition system detects and analyzes vehicle damage through an API built on computer vision and machine learning. Its deep learning models can automatically locate a car, identify its parts, assess the damage, and tag the damage by type. Because these analyses run in parallel, the whole process takes only a few seconds: the system identifies the car’s parts and then estimates the extent of the damage and the likely repair costs.

The underlying machine learning models can be retrained on a customer’s own dataset, including collision data, and deployed either on-premises or as a SaaS solution. This lets the API be tailored to specific business requirements, making it a practical tool for automating claims processing.

Choosing the Right Computer Vision Model For You

There is a wide variety of computer vision models to choose from, and computer vision platforms give users access to thousands of open-source datasets and models. Here we cover two major types: a model that detects damage and a model that detects car parts.

A Computer Vision Model that Detects Damage

This model is trained to detect damage anywhere on a car. Its training data contains many images of different damage types, such as broken parts, scratches, and dents, so it can detect these types of damage and distinguish between them.

A Computer Vision Model that Detects Car Parts

This model is trained to detect the parts that make up a car. It learns from many images of different car parts and segments each part within an image, including major components such as windows, doors, bumpers, and front and rear lights. It can also distinguish smaller parts such as mirrors, the tailgate, and the hood.

Both models use machine learning algorithms trained for vehicle inspection: one to detect damage and the other to differentiate between car parts.

How Does a Vehicle Detection System Work?

With car damage recognition, businesses can replace the slow, manual process of handling and approving claims with faster machine learning analysis. The solution speeds up data processing, reduces staffing costs, cuts fraud by over 80%, and significantly improves image data analysis. It also works on-site, guiding users to meet photo requirements.

This section describes the main structure of a vehicle detection and counting system. The process follows a sequence of steps to automatically identify and locate vehicles within a given area using image or video analysis. Here’s an overview of the steps involved:

1) Data Acquisition

The system starts by capturing video of the traffic scene using cameras, sensors, or similar monitoring devices. This video becomes the primary input for the rest of the vehicle detection pipeline.

2) Road Surface Extraction and Division

Next, the system extracts the road surface area within each frame, segmenting it so that vehicle detection concentrates on the relevant region. The extracted road surface is then divided into smaller sections, or grid cells, to enable efficient vehicle counting and tracking.
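The road-division step can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: the road surface is approximated by an axis-aligned rectangle (real systems typically use a polygon or segmentation mask), and each detection is assigned to the cell containing the bottom-center of its box, roughly where the tires meet the road. The function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def cell_for_point(x: float, y: float, roi: Box, rows: int, cols: int) -> Optional[Tuple[int, int]]:
    """Return the (row, col) grid cell containing (x, y), or None if it lies outside the road ROI."""
    rx1, ry1, rx2, ry2 = roi
    if not (rx1 <= x < rx2 and ry1 <= y < ry2):
        return None
    # Clamp to guard against floating-point rounding right at the far edge.
    col = min(int((x - rx1) / (rx2 - rx1) * cols), cols - 1)
    row = min(int((y - ry1) / (ry2 - ry1) * rows), rows - 1)
    return row, col


def count_per_cell(boxes: Iterable[Box], roi: Box, rows: int, cols: int) -> Counter:
    """Count detections per road cell, using each box's bottom-center point."""
    counts: Counter = Counter()
    for x1, y1, x2, y2 in boxes:
        # The bottom-center approximates where the vehicle touches the road.
        cell = cell_for_point((x1 + x2) / 2, y2, roi, rows, cols)
        if cell is not None:
            counts[cell] += 1
    return counts
```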

3) YOLOv3 Object Detection

The YOLOv3 deep learning object detection method sits at the heart of the vehicle detection and tracking process. YOLOv3 (You Only Look Once, version 3) is an algorithm designed for real-time object detection. It uses a deep convolutional neural network to detect a variety of objects, including vehicles, quickly and accurately in complex scenes.

YOLOv3 takes a grid-based approach, dividing the input image into a grid of cells (at three different scales, to catch objects of different sizes). For each cell, it predicts a few bounding boxes, each expressed relative to a predefined anchor box, together with an objectness score. It also predicts class probabilities for each box, which lets it distinguish between the different types of vehicles in the traffic scene.
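To make the grid-based prediction concrete, here is a minimal Python sketch of how one raw YOLOv3 output is turned into a box, following the decoding equations from the YOLOv3 paper: the center is σ(tx) + cx and σ(ty) + cy in grid units, and the size is the anchor size scaled by e^tw and e^th. The input layout, function name, and example anchor are assumptions for illustration, not any library's API. A full detector would also loop over every cell, anchor, and scale, and then apply non-maximum suppression.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def decode_yolov3_box(t: Sequence[float], cell_x: int, cell_y: int,
                      anchor_wh: Tuple[float, float], stride: float,
                      class_names: List[str]):
    """Decode one raw YOLOv3-style prediction into a pixel box, best class, and score.

    Assumed layout: t = [tx, ty, tw, th, t_obj, t_cls_0, t_cls_1, ...] (raw outputs).
    anchor_wh is the anchor size in input-image pixels; stride = input size / grid size.
    """
    tx, ty, tw, th, t_obj = t[:5]
    # Center: the sigmoid keeps the offset inside the responsible cell; add the cell index.
    cx = (sigmoid(tx) + cell_x) * stride
    cy = (sigmoid(ty) + cell_y) * stride
    # Size: scale the anchor (prior) box exponentially.
    w = anchor_wh[0] * math.exp(tw)
    h = anchor_wh[1] * math.exp(th)
    objectness = sigmoid(t_obj)
    # YOLOv3 uses independent logistic classifiers rather than a softmax.
    class_probs = [sigmoid(c) for c in t[5:]]
    best = max(range(len(class_probs)), key=class_probs.__getitem__)
    score = objectness * class_probs[best]
    box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
    return box, class_names[best], score
```

Multiplying objectness by the class probability gives a single confidence per box, which is what a threshold or non-maximum suppression step would then act on.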

4) Vehicle Tracking and Counting

To count and track vehicles accurately, the system applies tracking algorithms. These use YOLOv3’s detections to maintain a consistent identity for each vehicle across consecutive frames. Common choices include Kalman filters, which predict each vehicle’s motion, and SORT (Simple Online and Realtime Tracking), which combines a Kalman filter with the Hungarian algorithm to match new detections to existing tracks.
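The identity-keeping idea can be illustrated with a simple greedy IoU (intersection over union) tracker. This is a simplified stand-in, not SORT itself: SORT additionally predicts each track's motion with a Kalman filter and matches with the Hungarian algorithm. The class name, thresholds, and box format (x1, y1, x2, y2) are assumptions for this sketch.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Greedy IoU tracker that keeps a stable ID per vehicle across frames (hypothetical)."""

    def __init__(self, iou_threshold: float = 0.3, max_missed: int = 5):
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed
        self.tracks: Dict[int, Box] = {}
        self.missed: Dict[int, int] = {}
        self._next_id = 0

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        """Match this frame's detections to tracks; return {track_id: box} for this frame."""
        # Score every (track, detection) pair, then match greedily from the best IoU down.
        pairs = sorted(
            ((iou(box, det), tid, di)
             for tid, box in self.tracks.items()
             for di, det in enumerate(detections)),
            reverse=True,
        )
        used_tracks, used_dets, result = set(), set(), {}
        for score, tid, di in pairs:
            if score < self.iou_threshold:
                break
            if tid in used_tracks or di in used_dets:
                continue
            used_tracks.add(tid)
            used_dets.add(di)
            self.tracks[tid] = detections[di]
            self.missed[tid] = 0
            result[tid] = detections[di]
        # Drop tracks that have gone unmatched for too many frames.
        for tid in list(self.tracks):
            if tid not in used_tracks:
                self.missed[tid] += 1
                if self.missed[tid] > self.max_missed:
                    del self.tracks[tid]
                    del self.missed[tid]
        # Any unmatched detection starts a new track.
        for di, det in enumerate(detections):
            if di not in used_dets:
                tid = self._next_id
                self._next_id += 1
                self.tracks[tid] = det
                self.missed[tid] = 0
                result[tid] = det
        return result
```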

5) Counting and Analysis

The system then counts the tracked vehicles as they enter or exit specific regions of interest within the scene. These regions could include the entry and exit points of highway segments or designated monitoring areas. By analyzing the trajectories and interactions of the tracked vehicles, the system can also provide insights into traffic flow, congestion, and other relevant metrics.
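Counting at an entry or exit point often reduces to detecting when a tracked vehicle's center crosses a virtual line. Here is a minimal sketch, assuming the tracker reports {track_id: (x, y)} centers each frame and that the counting line is horizontal. Which direction counts as "entering" depends on the camera setup, and the class name is hypothetical.

```python
from typing import Dict, Set, Tuple


class LineCounter:
    """Count tracked vehicles crossing a horizontal virtual line at y = line_y."""

    def __init__(self, line_y: float):
        self.line_y = line_y
        self.last_y: Dict[int, float] = {}
        self.counted: Set[int] = set()
        self.down = 0  # e.g. vehicles entering the monitored segment (camera-dependent)
        self.up = 0    # e.g. vehicles leaving it

    def update(self, tracks: Dict[int, Tuple[float, float]]) -> None:
        """tracks maps track_id -> current center (x, y) in pixels."""
        for tid, (_, y) in tracks.items():
            prev = self.last_y.get(tid)
            self.last_y[tid] = y
            # Skip a track's first sighting and any track already counted once.
            if prev is None or tid in self.counted:
                continue
            # Moving from one side of the line to the other means it crossed this frame.
            if prev < self.line_y <= y:
                self.down += 1
                self.counted.add(tid)
            elif prev >= self.line_y > y:
                self.up += 1
                self.counted.add(tid)
```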

6) Output Visualization

The final step is to visualize the results. The system generates annotated video frames with bounding boxes around detected vehicles, labels indicating their types, and trajectory paths showing how each vehicle moved. This output supports real-time monitoring, traffic management, and further in-depth analysis.

In summary, a vehicle detection and counting system follows a structured process: it takes video as input, extracts and divides the road surface, and then detects vehicles in highway traffic scenes with the YOLOv3 deep learning method. Through vehicle tracking, counting, and visualization of results, these systems support efficient traffic management and informed decision-making.

Car Detection Model of Spyne

Spyne’s car detection image processing model takes things further. Our AI-powered editing platforms include a web application named Darkroom and a smartphone app for iOS and Android. Both offer automated image editing, and the smartphone app additionally offers AI-guided photoshoots.

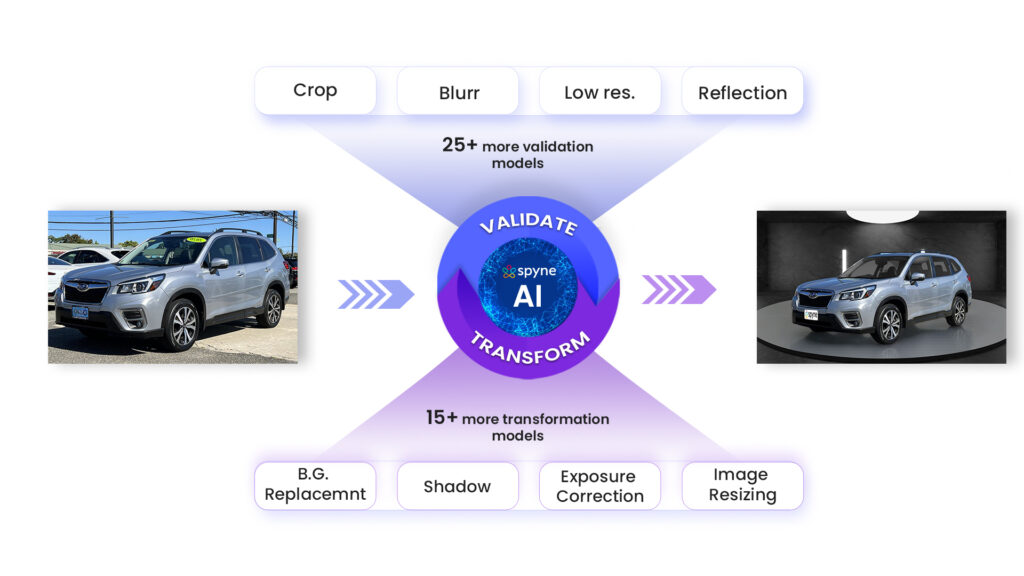

Every image you upload to Spyne is checked by 35+ individual APIs to give you the best possible image for your digital car catalog. Depending on your requirements, you can use the full platform or only the individual APIs you need. You can also use our software development kit (SDK) to build your own white-label app.

Steps for Car Detection and Classification

Spyne’s AI-powered vehicle detection and classification offers several features. Let’s explore what Spyne’s AI car detector does:

Car Classifier

It verifies whether the object in your image is a car. This check is built into Spyne’s virtual car photography studio and is also available as a separate API.

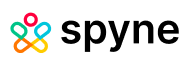

Angle Detection

This feature detects the angle of the car relative to the camera.

Crop Detection

It detects if the image has been cropped.
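One simple way to flag a likely crop, shown here as an illustrative heuristic rather than Spyne's actual method, is to check whether the car's bounding box (from the car detector) runs into an edge of the frame. The margin and function names are assumptions.

```python
from typing import Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def touched_edges(box: Box, width: int, height: int, margin: int = 2) -> Set[str]:
    """Return which image edges the car's bounding box touches (within `margin` pixels)."""
    x1, y1, x2, y2 = box
    edges = set()
    if x1 <= margin:
        edges.add("left")
    if y1 <= margin:
        edges.add("top")
    if x2 >= width - margin:
        edges.add("right")
    if y2 >= height - margin:
        edges.add("bottom")
    return edges


def looks_cropped(box: Box, width: int, height: int, margin: int = 2) -> bool:
    """Heuristic: a car box that runs into a frame edge suggests part of the car is cut off."""
    return bool(touched_edges(box, width, height, margin))
```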

Distance Detection

This API automatically estimates the distance between the car and the camera that photographed it.
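A common way to estimate camera distance from a single photo is the pinhole-camera relation: distance ≈ focal length (in pixels) × real-world size ÷ size in the image (in pixels). The sketch below assumes the car's real length is known (for a side-on shot) and that the camera's focal length and sensor width are available, for example from EXIF data. It is a generic illustration, not Spyne's API, and the function names are hypothetical.

```python
def focal_length_px(focal_length_mm: float, sensor_width_mm: float, image_width_px: int) -> float:
    """Convert a lens focal length from millimeters to pixels for a given sensor and image width."""
    return focal_length_mm / sensor_width_mm * image_width_px


def distance_from_size(real_size_m: float, size_px: float, focal_px: float) -> float:
    """Pinhole-camera estimate of camera-to-object distance in meters.

    real_size_m: a known real-world dimension of the car (e.g. its length in a side-on shot).
    size_px: the same dimension measured in the image, e.g. the detector's box width.
    """
    if size_px <= 0:
        raise ValueError("size in pixels must be positive")
    # Similar triangles: size_px / focal_px == real_size_m / distance.
    return focal_px * real_size_m / size_px
```

For example, a 4.5 m car spanning 900 px in an image taken with a 1000 px focal length is about 5 m from the camera.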

Exposure Detection

This feature checks the brightness of the image.
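A brightness check can be as simple as averaging perceived luminance. This minimal sketch uses the ITU-R BT.601 luma weights (0.299 R + 0.587 G + 0.114 B) on 8-bit RGB pixels; the under- and overexposure thresholds are illustrative assumptions, not Spyne's values.

```python
from typing import Iterable, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def mean_luma(pixels: Iterable[Pixel]) -> float:
    """Average perceived brightness (0 to 255) using ITU-R BT.601 luma weights."""
    total, n = 0.0, 0
    for r, g, b in pixels:
        total += 0.299 * r + 0.587 * g + 0.114 * b
        n += 1
    if n == 0:
        raise ValueError("image has no pixels")
    return total / n


def exposure_label(pixels: Iterable[Pixel], dark_below: float = 70.0,
                   bright_above: float = 190.0) -> str:
    """Classify exposure as 'underexposed', 'overexposed', or 'ok' (thresholds are illustrative)."""
    luma = mean_luma(pixels)
    if luma < dark_below:
        return "underexposed"
    if luma > bright_above:
        return "overexposed"
    return "ok"
```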

Watermark Detection

Check if there are any watermarks on the image.

Car Type Classifier

It classifies the car according to Spyne’s vehicle classification chart: sedan, SUV, hatchback, or pickup truck.

Location Classifier

This classifies where the car was photographed: outdoors, indoors, or in a studio.

Number Plate Detection and Extraction

This API detects the car’s number plate and extracts it from the image.

Antenna Detection

This detects if the car has an antenna on it or not.

Car Part Detection

This detects the various parts of the car such as its tires, side mirrors, headlights, front grille, and door handle.

Car Key Point Detection

This identifies the key features and their locations on the car such as the side mirror and the door handle.

Car Shoot Category Classifier

This system categorizes the car image, identifying whether it’s an interior shot, exterior shot, or something else entirely.

Focused Exterior Shot Extraction

This automatically captures and extracts focus shots of the exterior of the car from the images provided to it.

Interior Sub Classes Classifier

It detects the different interior features, such as an odometer, dashboard, seat, steering wheel, and infotainment system in the image.

Profanity Classifier

This detects if the image contains anything profane and points it out.

Tint Classifier

It detects if the car’s windows have been tinted or not.

Blur Score

This detects the amount of blurring in the car image and rates it by giving it a score.
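A widely used blur metric is the variance of the Laplacian: sharp edges produce strong, varied second-derivative responses, while blur flattens them. The pure-Python sketch below applies a 4-neighbor Laplacian to a small grayscale image given as nested lists. It illustrates the general technique, not necessarily Spyne's scoring. With OpenCV, the same idea is commonly written as `cv2.Laplacian(gray, cv2.CV_64F).var()`, and any "blurry" cutoff would need tuning on real photos.

```python
from typing import List


def laplacian_variance(gray: List[List[float]]) -> float:
    """Blur score: variance of the 4-neighbor Laplacian over interior pixels.

    Higher values mean more edge detail (sharper); values near zero suggest blur
    or a featureless image.
    """
    if len(gray) < 3 or len(gray[0]) < 3:
        raise ValueError("image must be at least 3x3")
    responses = []
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            # Kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]] applied at (x, y).
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)
```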

Dent and Damage Detection

This performs car dent detection and detects any other damage to the vehicle’s exterior.

Banner Detection

This detects if the car image has a banner or not.

Tire Detection

Check whether the object in the image is a tire.

Mirror Classifier

This detects and identifies rearview mirrors in car images.

Car Cleanliness Detection

This API checks if the car body is clean or has any mud on it.

Tilt Classifier

Analyze the image to see if the car is tilted to one side.
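If a tire detector returns the centers of both wheels in a side-on shot, tilt can be estimated from the angle of the line joining them. Here is a minimal sketch under that assumption; the 2° tolerance and the function names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in image pixels; y grows downward


def tilt_degrees(wheel_a: Point, wheel_b: Point) -> float:
    """Angle of the wheel-to-wheel line relative to horizontal, in degrees.

    Positive means the right-hand wheel sits higher in the frame than the left-hand one.
    """
    (x1, y1), (x2, y2) = sorted([wheel_a, wheel_b])  # order the wheels left to right
    # Negate dy because image y coordinates grow downward.
    return math.degrees(math.atan2(-(y2 - y1), x2 - x1))


def is_tilted(wheel_a: Point, wheel_b: Point, tolerance_deg: float = 2.0) -> bool:
    """Hypothetical check: flag the shot if the car leans more than tolerance_deg."""
    return abs(tilt_degrees(wheel_a, wheel_b)) > tolerance_deg
```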

Tire Mud Classifier

Check to see if there is mud on the car’s tires in the photograph.

Reflection Classifier

Check for reflections on the car body, such as those of a nearby tree or electric pole.

Doors Position Detection

This feature checks if the car doors are open.

Wipers Position Detection

This API analyzes the image to determine if the vehicle’s wipers are raised.

Window Segmentation

This identifies and outlines the windows in car images.

See-Through Parts Segmentation

This segments those parts of the car that one can see through.

Number Plate Segmentation

This performs number plate recognition and isolates the number plates of the car.

Tire Segmentation

This identifies and segments the car’s tires.

Window Masking Transformation

Masks the car windows in the image to remove reflections.

Window See-Through Masking

Check if the car in the image has see-through windows.

Number Plate Masking

This feature masks the car’s number plate in the image, replacing it with a custom virtual plate.

Exposure Correction

Automatically corrects the brightness of images that are too bright or too dark.
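One standard way to correct exposure automatically is a gamma curve fitted to the image's mean brightness. The sketch below picks γ so that a pixel at the current mean level maps to mid-gray (γ = log(target) ÷ log(mean), with levels normalized to the range 0 to 1), then applies it through a 256-entry lookup table. For simplicity it works on 8-bit grayscale nested lists. The target value and function name are assumptions, and this is not presented as Spyne's algorithm.

```python
import math
from typing import List


def auto_gamma(gray: List[List[int]], target: float = 0.5) -> List[List[int]]:
    """Brighten or darken an 8-bit grayscale image with a single gamma curve.

    Gamma is chosen so that a pixel at the image's current mean level maps to `target`
    (a fraction of full scale, strictly between 0 and 1); all other levels follow
    the same power curve.
    """
    values = [v for row in gray for v in row]
    if not values:
        return [row[:] for row in gray]
    mean = sum(values) / len(values) / 255.0
    if mean <= 0.0 or mean >= 1.0:
        # An all-black or all-white image has no usable mean to fit against.
        return [row[:] for row in gray]
    gamma = math.log(target) / math.log(mean)
    # Lookup table mapping each of the 256 input levels to its corrected value.
    lut = [round(255 * (i / 255) ** gamma) for i in range(256)]
    return [[lut[v] for v in row] for row in gray]
```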

Reflection Correction

This feature corrects the reflections on the car’s body in the picture.

Car Reflection – Mirror Floor

This transforms the floor into a mirror-like polished surface.

Car Reflection – Synthetica

This generates a dark shadow and a strong reflection of the car.

Car Reflection – Marble Floor

This generates a dark shadow and a medium reflection of the car.

Car Reflection – Kryton Floor

This generates a transparent shadow and a light reflection.

Soft/Hard Transparent Shadow

This generates and modifies shadows.

Exterior Background Removal

Removes the original background from the car’s exterior shots.

Car Seat Generator

This generates car seats in images, even if they don’t exist or are not visible in the original.

Window Fill

This fills in the missing parts of the windows.

Car Edge Refinement

This checks the car’s outline and refines its edges.

Interior Background Removal

Removes background from the car’s interior shots.

Miscellaneous Background Removal

Removes background from all other pictures of the vehicle.

Floor Generation and Shadow Options

Generates a virtual floor beneath the car to give the image a realistic look.

Logo Placement

This feature assists in placing your dealership’s logo in the image.

Tire Reflection

This creates a reflection of the tires on the virtual floor for a realistic look.

Tilt Correction

This corrects the tilt of the car in the image.

Interior Background Removal

This isolates the interior car components by removing the background of the car interior in the image.

Watermark Removal

This removes any watermarks from the photograph.

Wall Replacement

This changes the wall of the image while retaining the floor.

Banner Creation

This creates the banner image for an online car listing.

Diagnose Image

This feature checks if there is any issue with the image’s aspect ratio, size, and resolution.
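A diagnosis step like this amounts to comparing the image against a set of requirements. In this minimal sketch, the requirement values (at least 1280×720, a 16:9 aspect ratio within 1%, and a 5 MB size cap) are hypothetical placeholders; each dealership website or marketplace sets its own.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Requirements:
    """Hypothetical listing requirements; real marketplaces define their own values."""
    min_width: int = 1280
    min_height: int = 720
    aspect_ratio: float = 16 / 9
    aspect_tolerance: float = 0.01  # allowed relative deviation (1%)
    max_file_bytes: int = 5 * 1024 * 1024


def diagnose(width: int, height: int, file_bytes: int,
             req: Optional[Requirements] = None) -> List[str]:
    """Return human-readable problems with an image; an empty list means it passes."""
    req = req or Requirements()
    problems = []
    if width < req.min_width or height < req.min_height:
        problems.append(f"resolution {width}x{height} is below {req.min_width}x{req.min_height}")
    ratio = width / height
    if abs(ratio - req.aspect_ratio) / req.aspect_ratio > req.aspect_tolerance:
        problems.append(f"aspect ratio {ratio:.3f} differs from required {req.aspect_ratio:.3f}")
    if file_bytes > req.max_file_bytes:
        problems.append(f"file size {file_bytes} bytes exceeds {req.max_file_bytes} bytes")
    return problems
```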

Super Resolution

Increases image resolution as per your dealership website/marketplace requirements.

Aspect Ratio

This feature uses super-resolution to increase or decrease the image’s aspect ratio per your needs.

DPI

This feature uses super-resolution to increase or decrease the DPI (Dots Per Inch) to meet your needs.
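DPI only links pixel count to printed size: pixels = inches × DPI. Raising the DPI while keeping the same physical size therefore means the image needs more pixels, which is why an upscaler such as super-resolution is involved. Here is a small sketch of that arithmetic, with hypothetical function names:

```python
import math
from typing import Tuple


def pixels_needed(print_width_in: float, print_height_in: float, dpi: int) -> Tuple[int, int]:
    """Pixel dimensions required to print at the given physical size (inches) and DPI."""
    return math.ceil(print_width_in * dpi), math.ceil(print_height_in * dpi)


def upscale_factor(current_px: Tuple[int, int], print_width_in: float,
                   print_height_in: float, dpi: int) -> float:
    """Enlargement needed (1.0 means none) to reach the target DPI at that print size."""
    need_w, need_h = pixels_needed(print_width_in, print_height_in, dpi)
    return max(need_w / current_px[0], need_h / current_px[1], 1.0)
```

For instance, a 1500×1200 image printed at 10×8 inches is only 150 DPI; reaching 300 DPI at that size requires doubling both dimensions.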

Additional Parameter Correction

Corrects the image’s aspect ratio, size, and resolution.

Video Trimmer

Generates frames from a 360° car video to create an interactive 360° spin view.
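Turning a walk-around video into a spin view starts with choosing which frames to keep. This sketch picks evenly spaced frame indices, assuming the video covers one full 360° loop at a roughly constant pace, so that equal frame spacing approximates equal angular spacing. Reading the frames themselves (for example with OpenCV's `cv2.VideoCapture`) is left out, and the function name is hypothetical.

```python
from typing import List


def spin_frame_indices(total_frames: int, num_views: int) -> List[int]:
    """Pick `num_views` evenly spaced frame indices from a 360-degree walk-around video.

    Assumes one full loop at a roughly constant pace, so equal frame spacing
    approximates equal angular spacing between views.
    """
    if total_frames <= 0 or num_views <= 0:
        raise ValueError("total_frames and num_views must be positive")
    num_views = min(num_views, total_frames)  # cannot pick more frames than exist
    step = total_frames / num_views
    return [int(i * step) for i in range(num_views)]
```

At 24 frames per second, a 30-second walk-around (720 frames) sampled into 36 views keeps every 20th frame, or one view per 10° of rotation.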

Object Obstruction

Check whether any object in front of the car blocks the view of it.

Blurry, Stretched, Or Distorted Images

This API checks whether the car or the image is blurry, stretched, or distorted, or otherwise fails to meet quality standards.

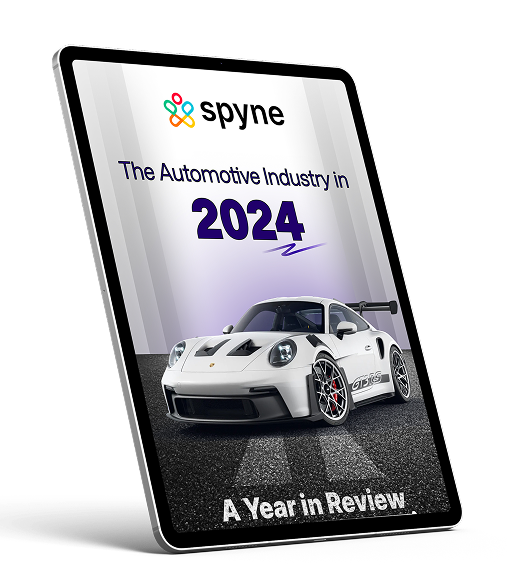

Real-World Use Case of Car Object Detection

Spyne’s app helps you get studio-quality images for your digital showroom. Its guided photoshoot tells you which angle of the vehicle to capture, and its automatic validation feature checks each photo and tells you whether it can be edited or needs to be reshot.

Next, upload the image to the virtual studio and select the edits you want, such as background replacement, window tinting, number plate masking, or logo placement. The virtual studio uses car detection to determine where the background lies in the image and how to remove and replace it. Once it recognizes a vehicle in an image, the system can also identify the car model and edit the windows, number plates, tires, and other parts. With AI, you can edit images in bulk within seconds, and the system remembers your settings so your catalog looks consistent throughout.

Car Damage Detection cuts down on the time and effort needed for manual inspections and supports smarter decision-making for the following businesses:

1) Insurance Companies: Prevents fraud in 80% of cases and significantly speeds up the underwriting process.

2) Car Rental Services: Lowers operational costs, boosts customer satisfaction, and increases retention rates.

3) Car Repair Services: Promotes collaboration and adds transparency to both the repair process and cost estimates.

Benefits & Features of Spyne AI Car Detection

Let’s look at the benefits of Spyne’s AI car detection and photo enhancement technology for creating high-quality images of your online car inventory:

- It is Quick: Faster turnaround time is every retailer’s dream. AI photo editing software makes it a reality by processing hundreds of photographs in seconds.

- It is Cost-efficient: AI photo enhancers can save you a lot of money. They not only cut the labor cost of editing pictures but can also reduce what you spend on car photography.

- Allows Bulk Editing At Once: When a disappointing background spoils an otherwise professional car photoshoot, you may face a marathon editing session or even a reshoot. With AI editing, fixing an entire batch takes only a few minutes.

- Accuracy and Consistency: AI editors remove and replace backgrounds, change colors, add shadows, and handle every other editing demand. With manual editing, accuracy and consistency depend on the editor; AI photo enhancers apply the same edits uniformly across every photo, eliminating human mistakes and inconsistencies.

- Full Range Detection: Spyne’s AI car recognition system can detect a wide range of damage across parts made of different materials, such as metal, rubber, fiber, glass, and plastic.

- Severity Score: We also provide a score that measures how severe the damage to the car is.

- Interior Damage Prediction: Based on the exterior damage, we can predict likely damage to the car’s internal components.

- Ease of Use: Photos of the damage can be captured with a smartphone, making car damage inspection easier.

Car Detection System: Impact and Future

Computer vision is transforming how car damage is assessed. With improvements in computer vision, insurance companies can use it to detect problems and speed up repairs. For instance, right after an accident, computer vision can assess the damage immediately and help insurers make quicker decisions on what to do next.

This also benefits consumers. Instant damage assessments can make the claims process smoother. You could just take pictures at the accident scene and send them through your insurer’s app. Car detection AI would analyze the photos, classify the damage, and estimate repair costs. This streamlined process leads to faster repairs and quicker claim resolution.

What is the Best Alternative to Computer Vision Models for Car Damage Detection?

While computer vision models are highly effective, alternatives such as Spyne’s hybrid systems, which combine vehicle detection sensors with traditional inspection techniques, are gaining considerable traction. Hybrid models rely on advanced sensors that collect real-time data for AI analysis.

By combining sensor data with AI vehicle recognition, these systems can achieve very high accuracy in detecting damage, parts, and anomalies. This approach suits processes that require instant decisions, such as accident claim processing. Integrating AI car detection and recognition with other methods also makes the overall approach to vehicle inspection more holistic.

Conclusion

Online viewers can have an attention span comparable to a goldfish’s. Without compelling car visuals, capturing their attention and converting them into buyers is extremely difficult, yet producing high-impact visuals through manual processes brings numerous bottlenecks. Spyne’s automated car photoshoot begins with car detection and delivers next-gen vehicle photography without the hassle.

Still have doubts, or want to know more about how Spyne can help you automate vehicle photography? Book a demo, and we’ll walk you through it in detail.